Hello all, I'm back with even more data!

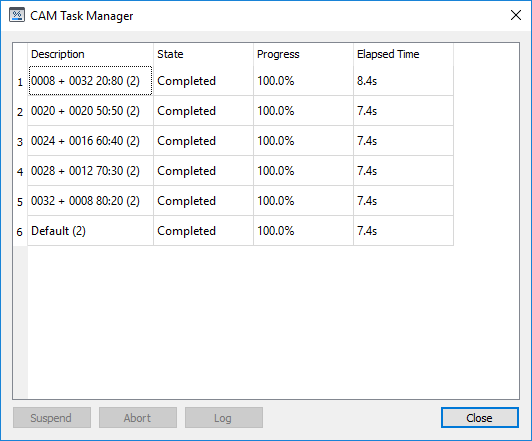

This time I recorded the generation time with the Task Manager inside Fusion so we could see how our changes affect it. The Task Manager doesn't really respect the "manual start" or "1 consecutive task" options, so to be accurate I generated each of the 13 operations one by one, twice, so that each operation would have the same resources available to it. Overall, the results were consistent.

I also upgraded my Excel graph-making abilities to make the graphs look a little nicer, and added data labels to make everything easier to compare.

I also ran both of these tests with the exact same part from the last post, so the file sizes in the last post and this one can be compared directly.

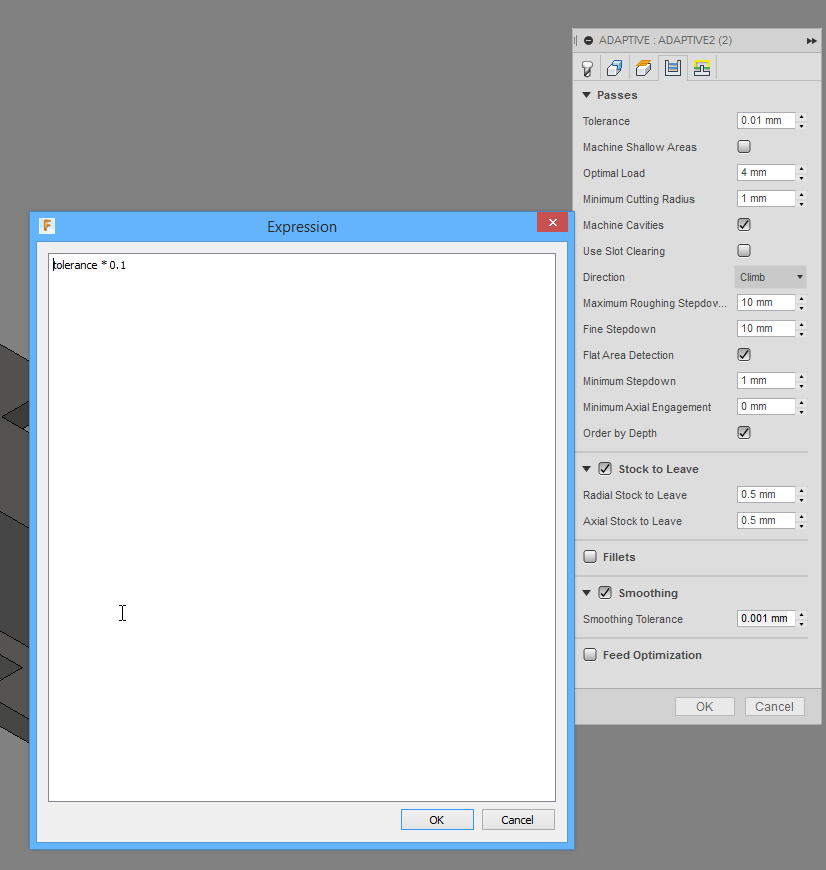

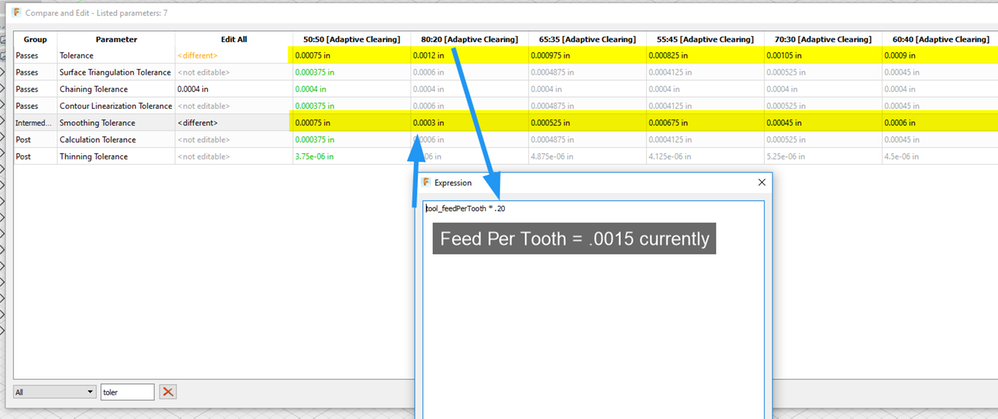

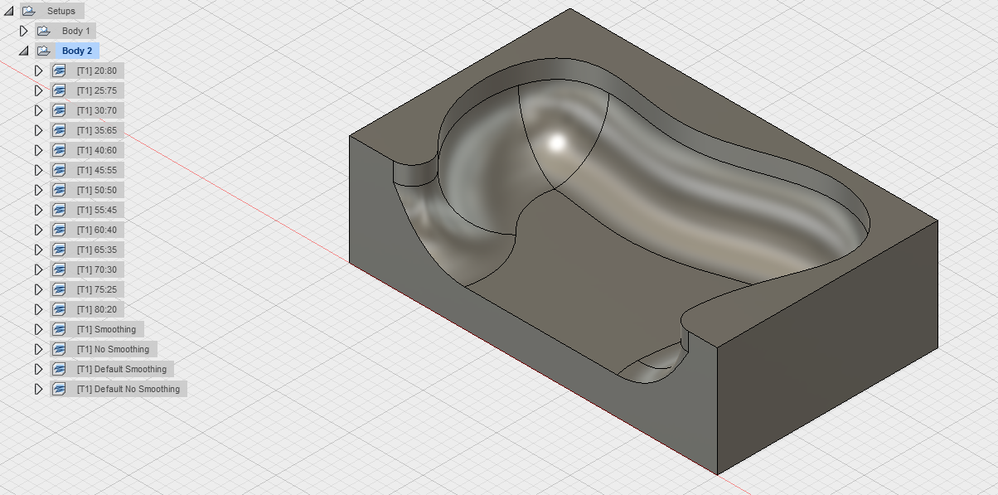

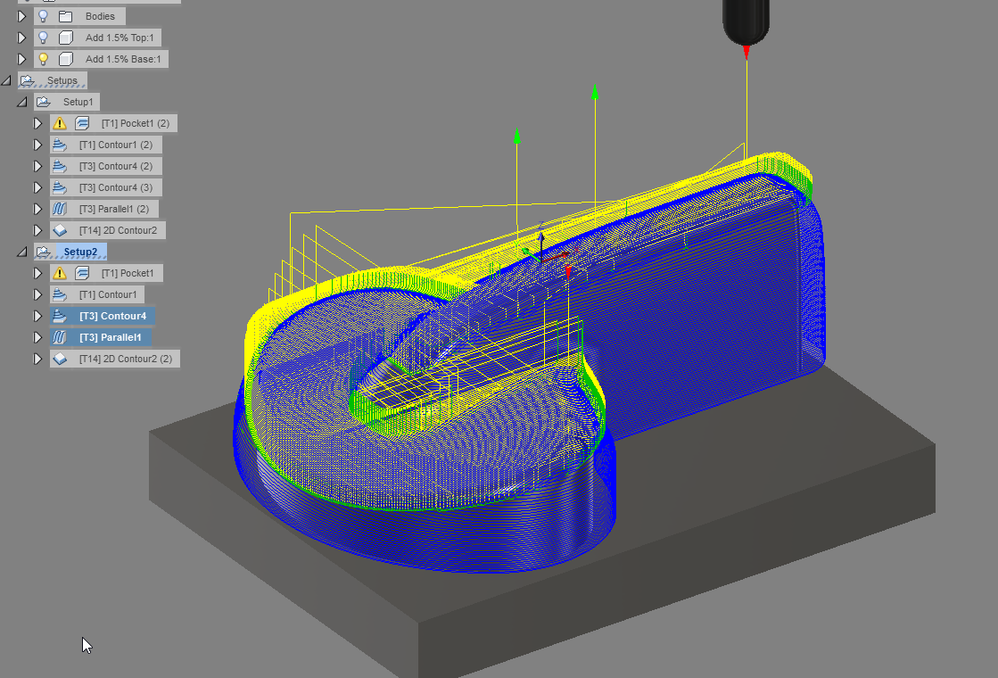

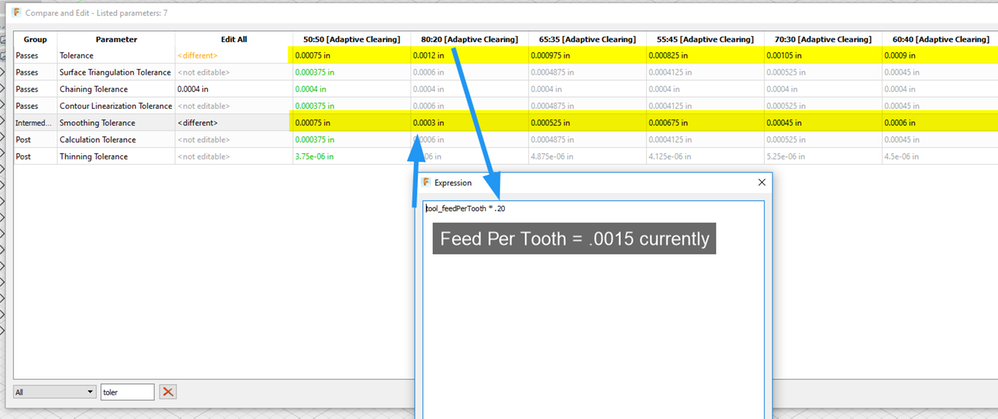

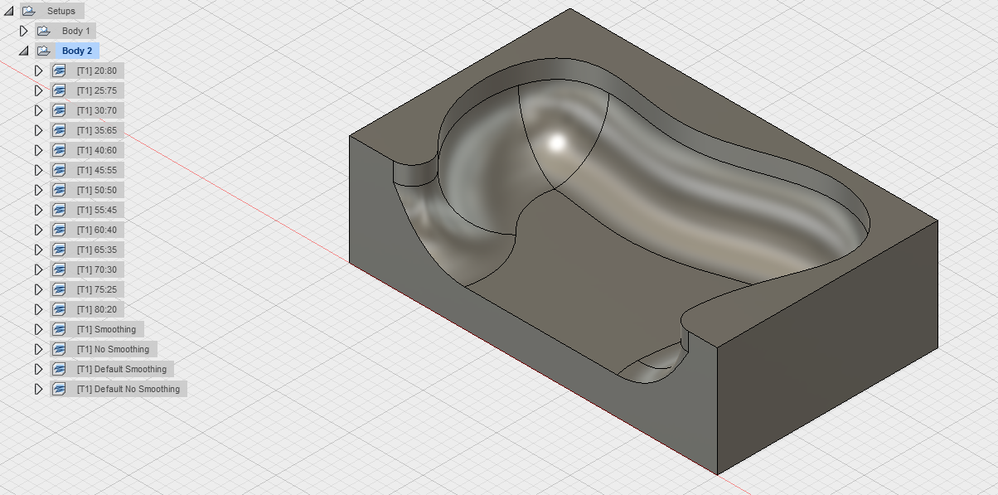

Lastly, since I figured I might be doing more of these tests, I created a CAM setup that uses the "Feed Per Tooth" value as the Total Tolerance for each operation. Each of the 13 operations uses expressions to instantly calculate new tolerance and smoothing values from the Total Tolerance value.
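For anyone who wants to check the numbers outside Fusion, here is a rough Python sketch of the arithmetic those expressions perform. It assumes the Total Tolerance is simply the sum of the tolerance and smoothing values, and that a ratio like 80/20 means 80% tolerance and 20% smoothing. The function name is made up for illustration, and this is not Fusion's expression syntax.

```python
def split_total_tolerance(total_tolerance, tolerance_pct):
    """Split a total tolerance into (tolerance, smoothing).

    Assumption: total = tolerance + smoothing, and tolerance_pct is the
    first number of the ratio (e.g. 80 for an 80/20 ratio).
    """
    if not 0 <= tolerance_pct <= 100:
        raise ValueError("tolerance_pct must be between 0 and 100")
    tolerance = total_tolerance * tolerance_pct / 100
    smoothing = total_tolerance - tolerance
    return tolerance, smoothing


# Example: the 0.0015" total tolerance used in this post
for pct in (80, 65, 50):
    tol, smooth = split_total_tolerance(0.0015, pct)
    print(f"{pct}/{100 - pct}: tolerance {tol:.5f} in, smoothing {smooth:.5f} in")
```

Changing one Total Tolerance value and letting the split follow from the ratio is the whole point: every operation updates from a single input.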

Here is how I currently have the CAM setup for this:

Editing 17 operations was a long process before.

OK, enough of the boring stuff, let's get to the data!

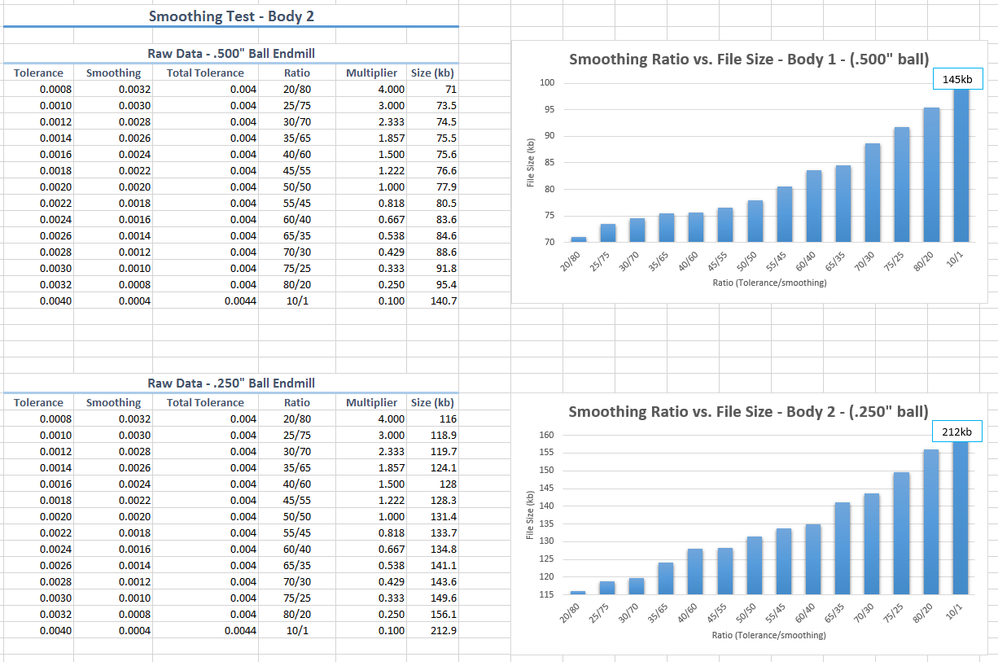

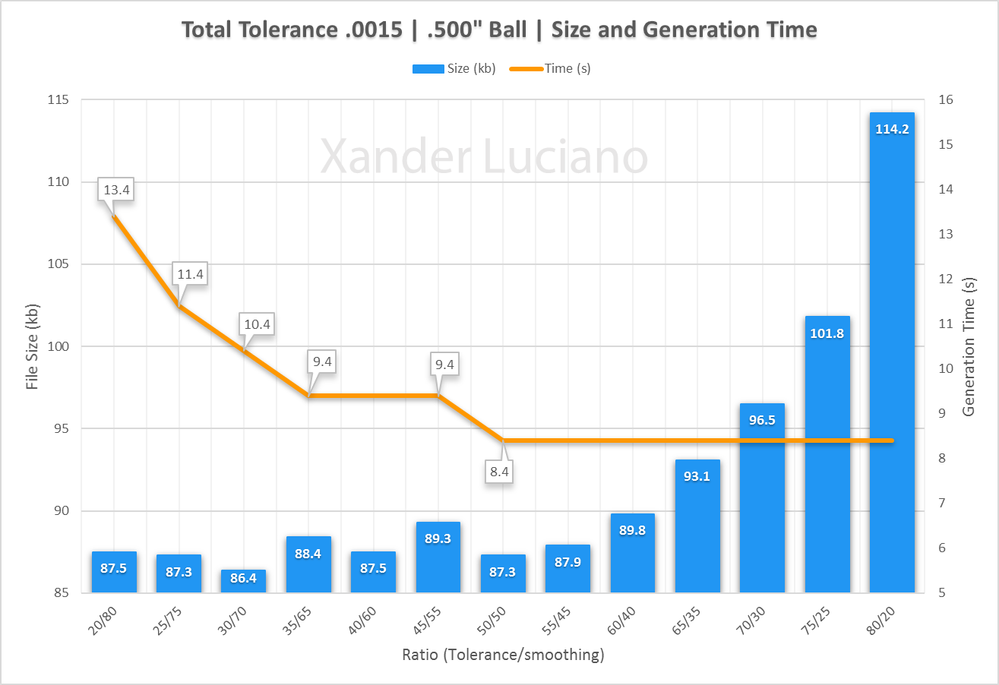

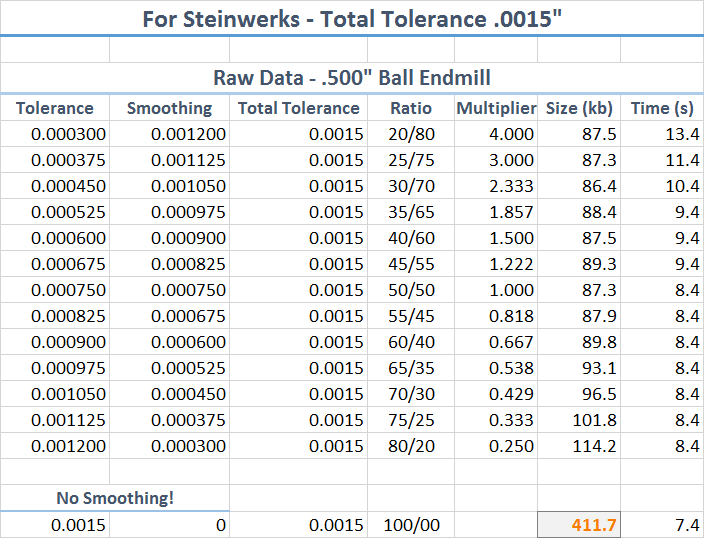

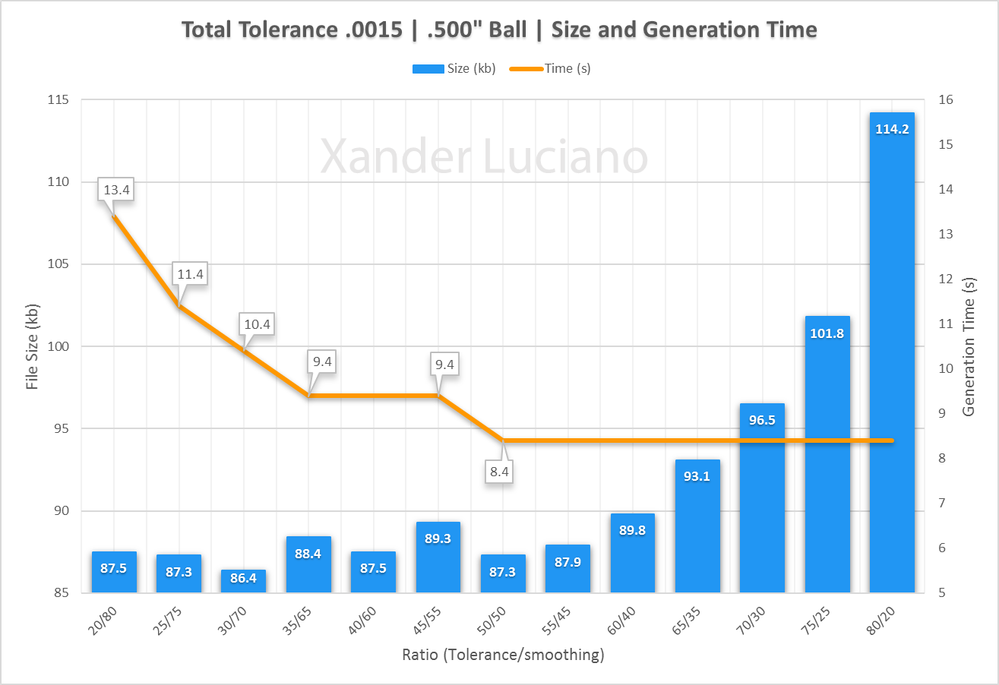

0.0015" Total Tolerance | 0.500" Ball Endmill

The Results:

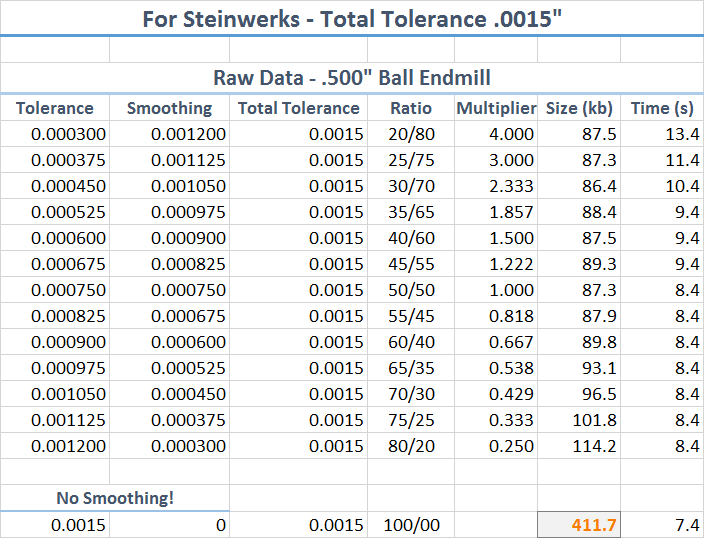

Raw Data:

Even with the tighter tolerance, we are still seeing a very clear decrease in file size - all the way until the 50/50 ratio! We also don't have any noticeable difference in toolpath generation time until then either! Compared to the toolpath without smoothing, there is very little noticeable difference.

Onto the next data set!

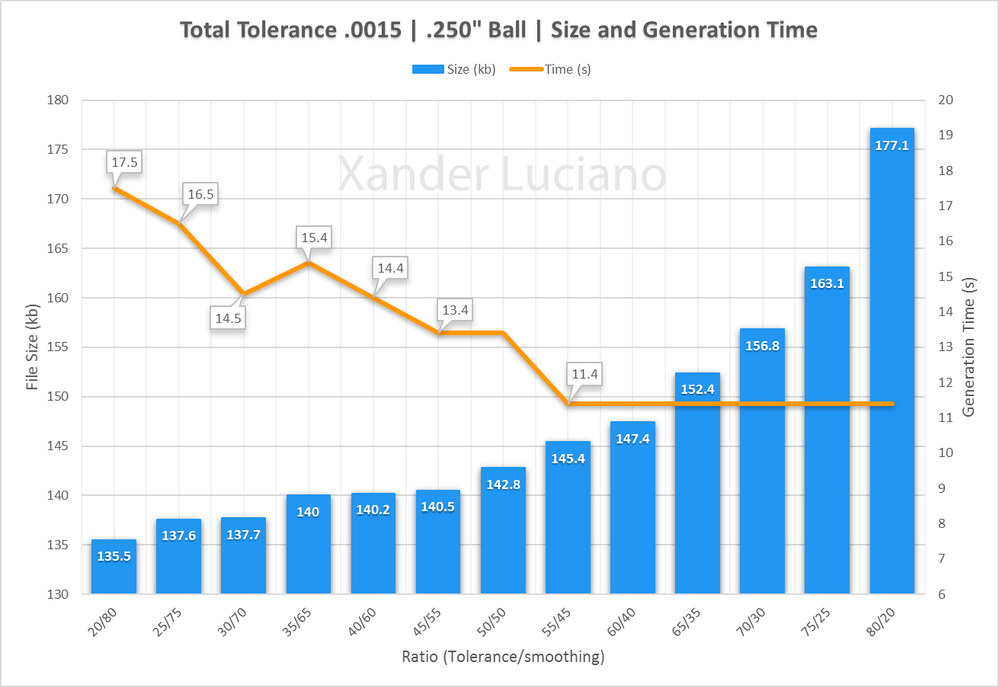

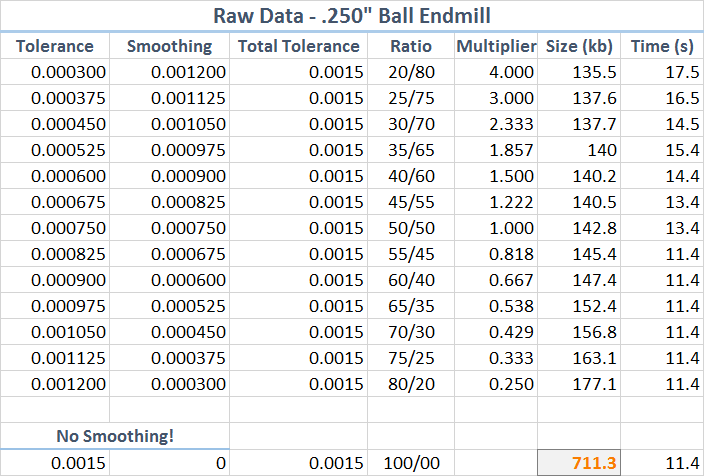

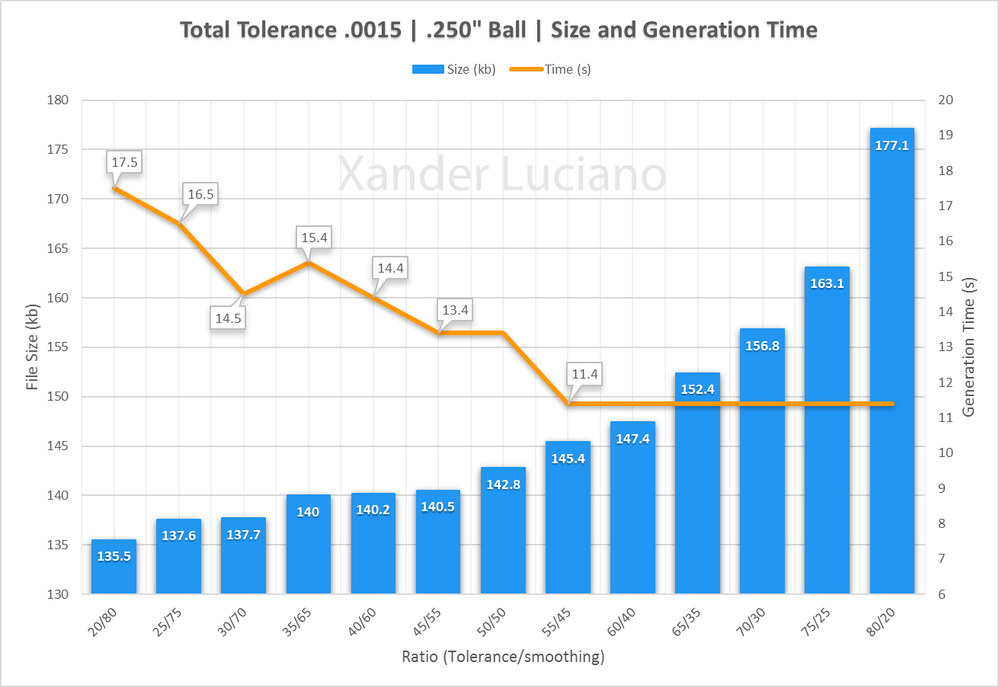

0.0015" Total Tolerance | 0.250" Ball Endmill

Is that not a perfect graph? With the 0.250" requiring more passes, we start seeing some really nice smoothed-out data. Once again we see noticeable reductions in file size all the way to the 50/50 ratio, where we start getting diminishing returns and increased toolpath generation time.

Again, we see a steady generation time up to the 50/50 ratio, where it then starts increasing.

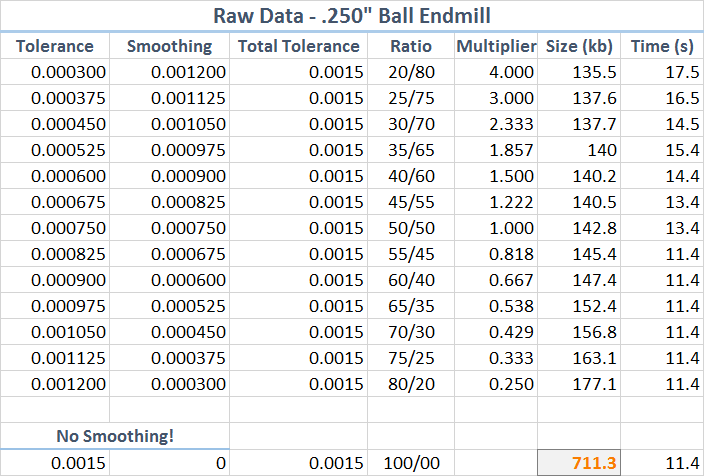

Raw Data:

Let's just look at the size of that file without smoothing. Yep, 711.3 KB. That is 4 times the size of the 80/20 toolpath! We're seeing a massive difference between not using smoothing and using smoothing.

Conclusion

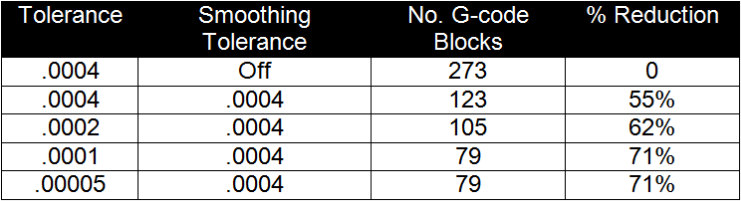

The original article I linked recommended that your tolerance be 1 to 4 times greater than your smoothing value. Well, 1 times is the 50/50 ratio, and 4 times is the 80/20 ratio.
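To see where those two endpoints come from, here's a quick Python sketch that converts "tolerance is k times smoothing" into a ratio. It assumes the tolerance and smoothing values add up to the total tolerance, and the function name is just for illustration.

```python
def multiple_to_ratio(k):
    """Convert 'tolerance = k * smoothing' into (tolerance %, smoothing %).

    Assumption: tolerance + smoothing = 100% of the total tolerance.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    smoothing_pct = 100 / (k + 1)
    tolerance_pct = 100 - smoothing_pct
    return tolerance_pct, smoothing_pct


# Print the ratio for each whole-number multiple in the 1x to 4x range
for k in (1, 2, 3, 4):
    tol_pct, smooth_pct = multiple_to_ratio(k)
    print(f"{k}x -> {tol_pct:.1f}/{smooth_pct:.1f}")
```

So the recommended 1x to 4x range covers everything between the 50/50 and 80/20 ratios tested here.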

So my recommendation now: start with the total acceptable tolerance.

Consider the accuracy of your machine, the tolerance requirements on your parts, and how fast your machine can process data.

Strategically create your toolpaths to take advantage of smoothing. Smoothing only works on the XY/XZ/YZ planes so do as much roughing as you can along those planes.

- Use Adaptive clearing with stock to leave. This way you can increase your Total Tolerance and use a low smoothing ratio (e.g. a 50/50 to 65/35 smoothing ratio).

- Use Parallel and Contour finishing strategies - they move along the XY/XZ/YZ planes by default and can take advantage of smoothing

When in doubt, using even the largest smoothing ratio will make a noticeable difference in large toolpaths such as adaptive clearing.

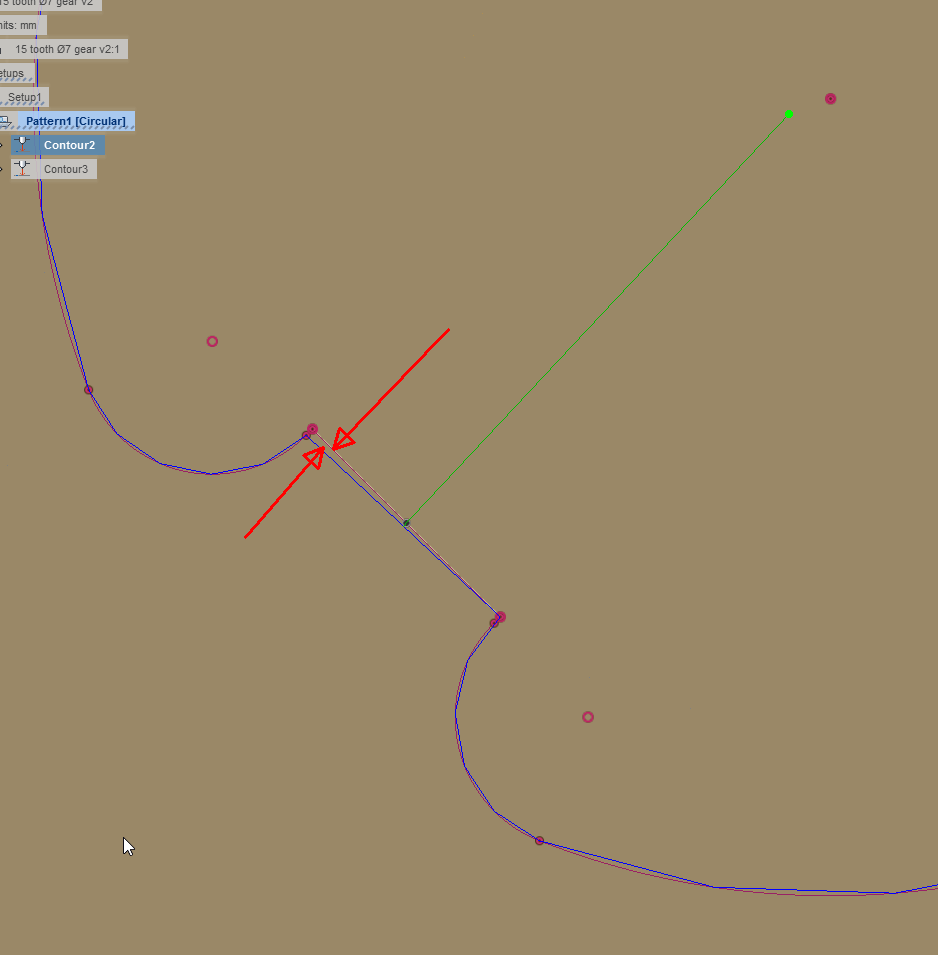

Lastly, I still need to look into that contouring issue. I wanted to nail down as much hard data as I could first.

Thoughts on the new findings, guys? What do you think of smoothing now? I'm definitely starting to understand its effect and getting a better feel for the cause/effect of the settings.

What else would you guys like to see now?

@Steinwerks @daniel_lyall @HughesTooling @LibertyMachine <- Hey, you changed usernames

And I'm gunna call a few other guys into this thread who I think might appreciate the info.

@RandyKopf @bmxjeff @Steven.Shaw

Also, don't be afraid to fact check me on this stuff. There is a lot of data to input and sort with these.

Thanks for the input guys!

- Xander Luciano

Kudo button - If it resolves your issue, press Accept as Solution!
